AR-assisted navigation of a mobile robot actuated by an SSVEP-based BCI

Brain-controlled robot navigation with augmented reality feedback

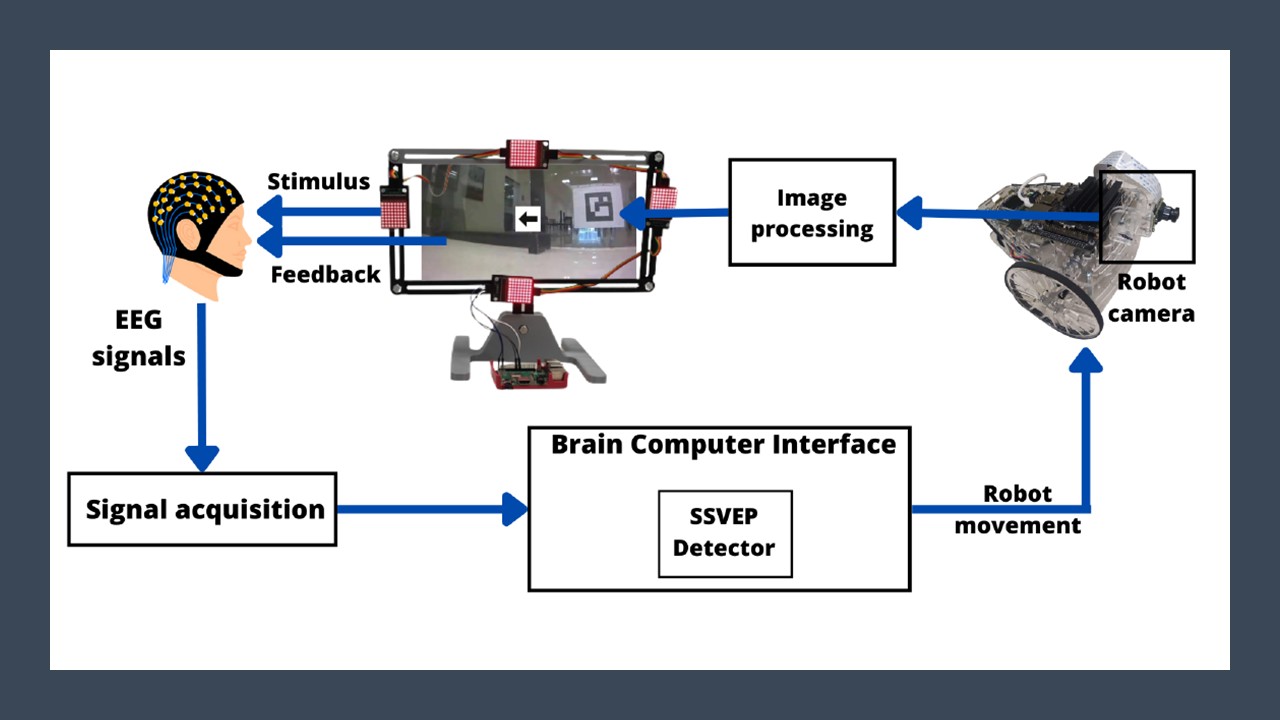

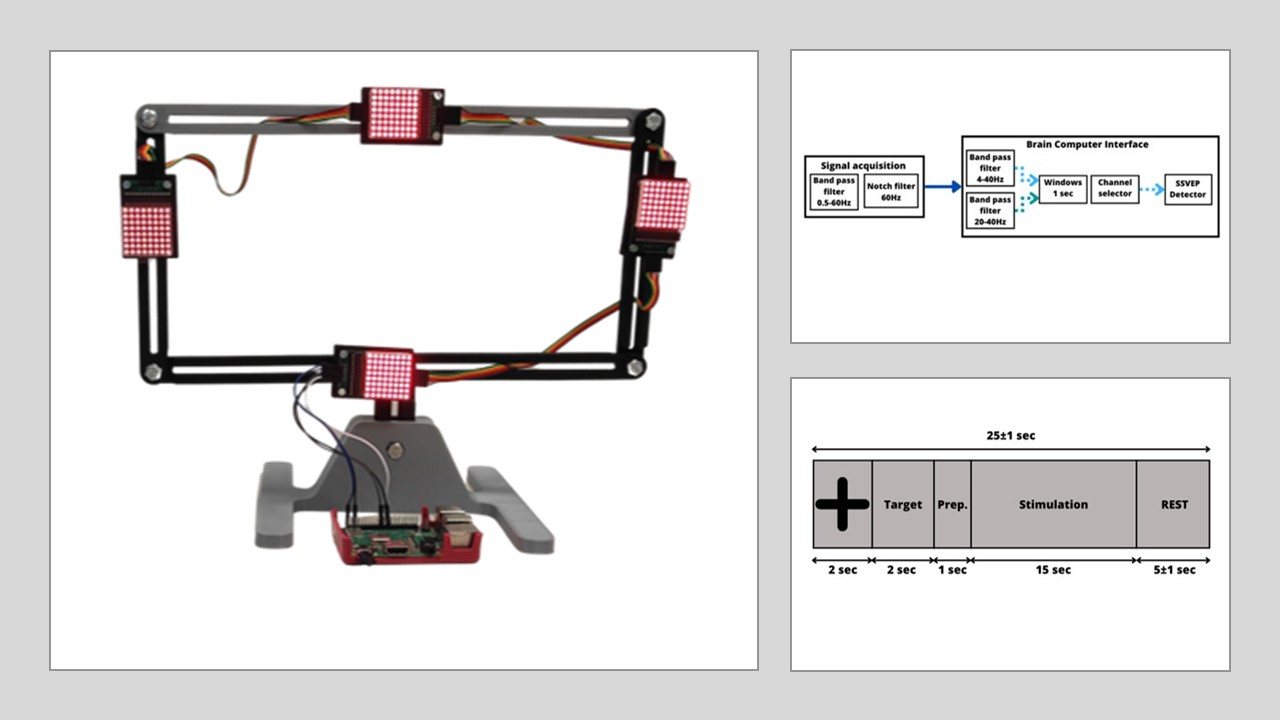

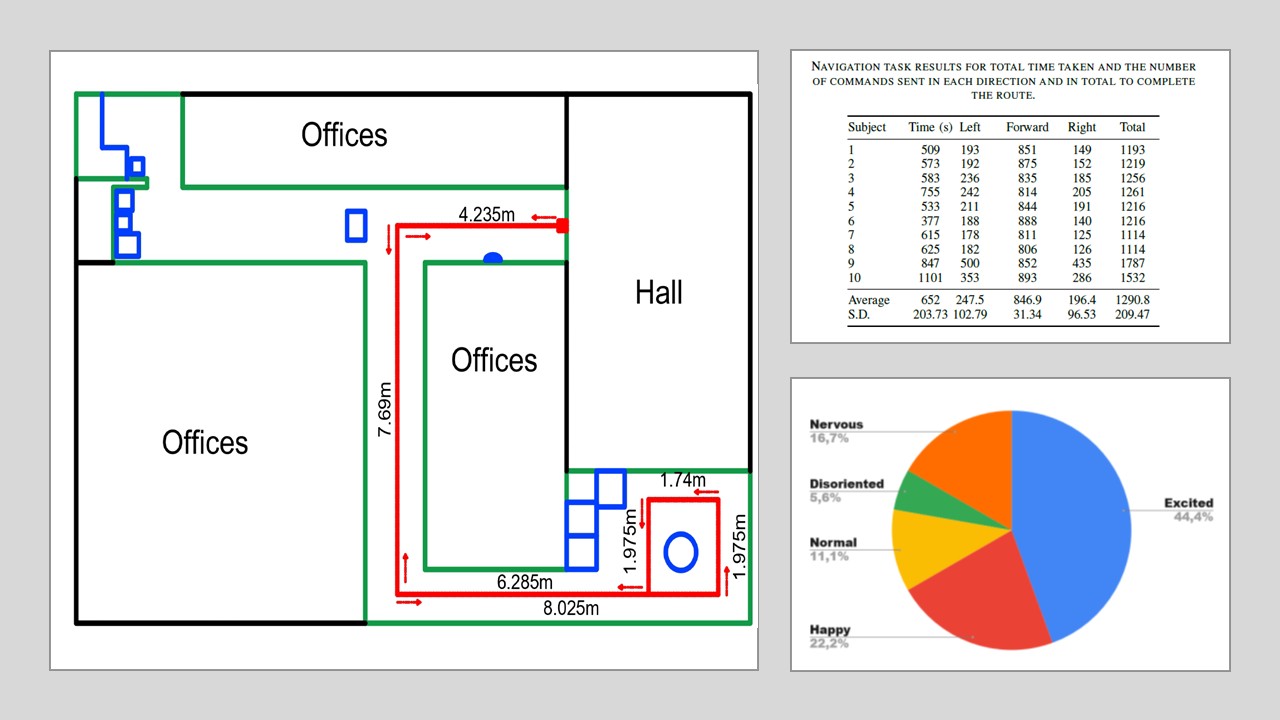

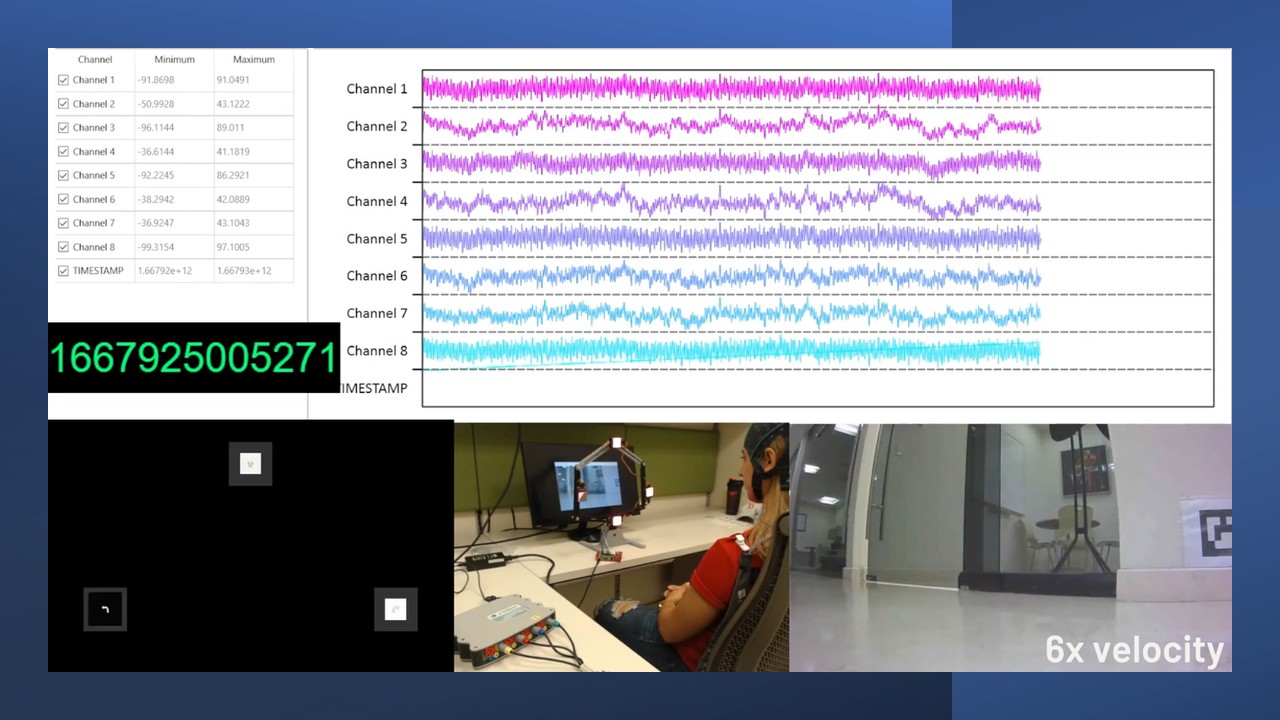

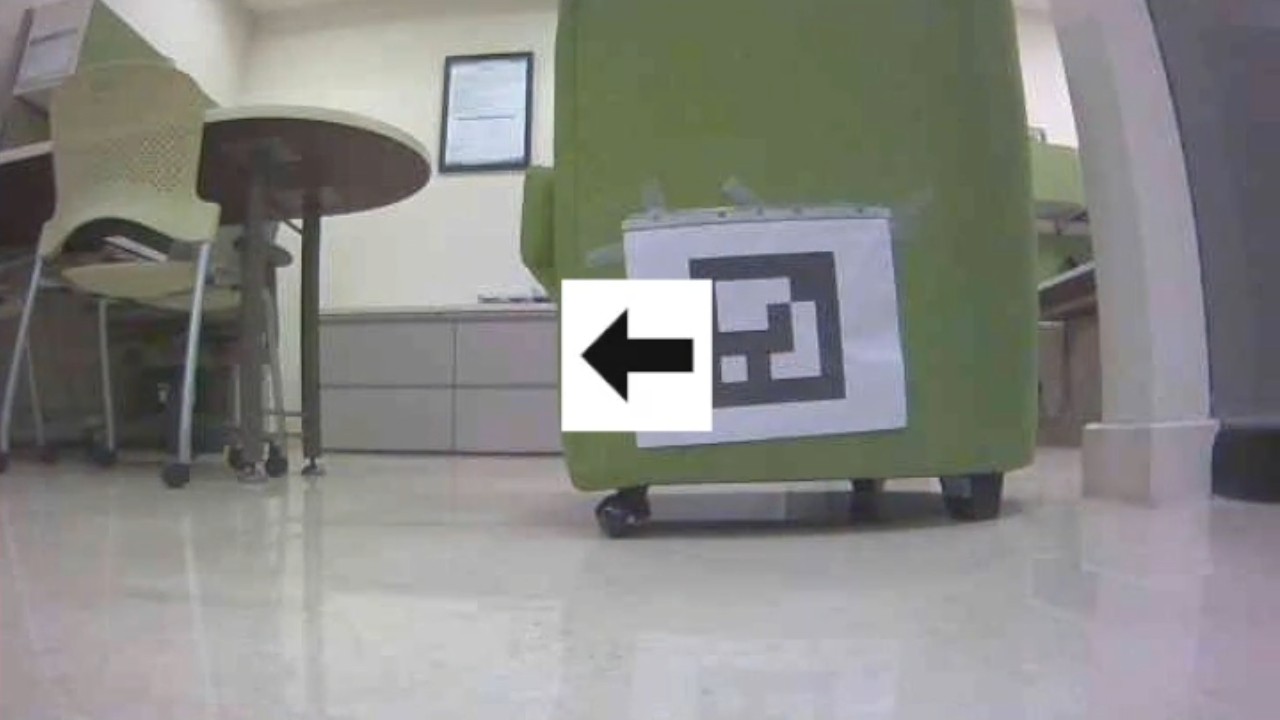

In this project, we developed a system that uses a Steady-State Visual Evoked Potential (SSVEP)-based Brain-Computer Interface (BCI) to control a mobile robot. The system enables active navigation by continuously sending movement commands to the robot whenever the BCI detects a specific command. To control the robot, we used three LEDs as visual stimuli (presented on a screen in front of the user), each corresponding to a different movement: turning left, moving forward, and turning right. A fourth LED served as a reference that indicated when the user was not sending a command, which caused the robot to stop. The robot was equipped with a camera that live-streamed its perspective to the user. This live stream was enhanced with Augmented Reality (AR) using ArUco markers, which provided the user with real-time guidance and directions. The goal of this project was to integrate the system and evaluate its usability in real-world scenarios with healthy participants.
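To make the detection step more concrete, here is a minimal sketch of one common way to decide which flickering stimulus a user is attending to: compare the signal power at each stimulus frequency and pick the strongest. This is an illustration, not the project's actual pipeline. The flicker frequencies, the sample rate, and the names `STIMULUS_FREQS`, `power_at`, and `detect_command` are all assumptions, since the description above does not state them.

```python
import math

# Hypothetical flicker frequencies in Hz; the project description does not give the real ones.
STIMULUS_FREQS = {"left": 8.0, "forward": 10.0, "right": 12.0, "stop": 15.0}


def power_at(signal, freq_hz, sample_rate_hz):
    """Return the power of `signal` at one frequency (a single-bin DFT)."""
    step = 2 * math.pi * freq_hz / sample_rate_hz
    re = sum(x * math.cos(step * n) for n, x in enumerate(signal))
    im = sum(x * math.sin(step * n) for n, x in enumerate(signal))
    return (re * re + im * im) / (len(signal) ** 2)


def detect_command(signal, sample_rate_hz, freqs=STIMULUS_FREQS):
    """Return the label whose stimulus frequency carries the most power."""
    return max(freqs, key=lambda name: power_at(signal, freqs[name], sample_rate_hz))


# Synthetic 2-second window sampled at an assumed 250 Hz, dominated by the 10 Hz stimulus.
sample_rate = 250
window = [math.sin(2 * math.pi * 10.0 * n / sample_rate) for n in range(500)]
detected = detect_command(window, sample_rate)
```

A real system would typically filter the EEG first, combine several channels, and use a more robust detector, but the idea of scoring each candidate frequency and choosing the best one is the same.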

System Functionality

- Visual Stimuli: Three LEDs (left, center, right), one per movement command

- Movement Control:

  - Left LED triggers left turn

  - Center LED triggers forward movement

  - Right LED triggers right turn

- AR Feedback: Live stream enhanced with ArUco markers

- Reference Command: Fourth LED indicates no command (robot stops)
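The mapping listed above can be sketched as a small lookup from the detected LED to a robot motion. This is only an illustration under stated assumptions: the velocity values, the label strings, and the names `COMMANDS`, `velocity_for`, and `drive` are hypothetical, and `send` stands in for whatever interface the robot actually exposes.

```python
# Hypothetical (linear m/s, angular rad/s) pairs; the real values are not given in the description.
COMMANDS = {
    "left": (0.0, 0.5),      # left LED: turn left
    "forward": (0.2, 0.0),   # center LED: move forward
    "right": (0.0, -0.5),    # right LED: turn right
    "stop": (0.0, 0.0),      # reference LED: no command, robot stops
}


def velocity_for(label):
    """Map a detected LED label to a velocity; unknown labels stop the robot."""
    return COMMANDS.get(label, COMMANDS["stop"])


def drive(labels, send):
    """Send one velocity command per detected label, in order."""
    for label in labels:
        send(*velocity_for(label))


# Usage: collect the commands that would be sent for a short sequence of detections.
sent = []
drive(["forward", "left", "stop", "unknown"], lambda v, w: sent.append((v, w)))
```

Falling back to the stop command for any unrecognized label is a design choice made here for safety; it mirrors the role of the reference LED rather than anything the description specifies.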

Project Highlights

- Technology: SSVEP-BCI + AR

- Application: Mobile robot navigation

- Key Feature: Real-time AR guidance

Technical Details

System Components

- SSVEP-BCI System

- Visual Stimuli Interface

- Mobile Robot Platform

- AR Guidance System